Introduction

Event streaming platforms like Apache Kafka have grown in importance as more businesses are built around data. Customers expect responsive experiences when they interact with companies, so real-time decisions based on events are becoming more and more crucial. Suppose a customer shopping online for a smartphone finds that the model they want is out of stock. If the phone manufacturer gets this update a week later, it does the business no good. This is where platforms like Kafka come into play. Distributed streaming platforms and services, such as Kafka, Google Cloud Dataflow, Azure Event Hubs, and Amazon Kinesis, handle large volumes of real-time data and help organizations future-proof their applications. In this blog, let’s learn more about Kafka and its uses.


Why is event streaming important?

Event stream processing (ESP) technologies like Kafka help businesses capture insights and take prompt actions on live data. Here are the top benefits of event streaming:

- ESP platforms analyze and aggregate data in real time.

- It is possible to act on past trends as ESP technologies also hold onto historical data.

- ESP platforms don’t have to reach out to external data sources.

- ESP platforms can process single points of data as they happen.

- ESP-equipped systems can react to changes, such as a sudden increase in load.

- ESP-enabled systems are flexible and can scale according to the demand.

- ESP systems are more resilient than batch systems.

What is Kafka?

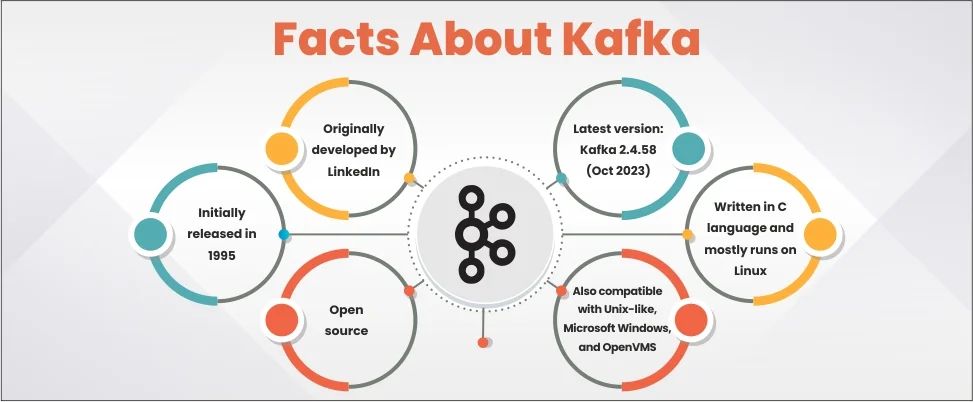

Kafka is a data streaming platform originally developed at LinkedIn and later donated to the Apache Software Foundation. It was open-sourced in 2011, and version 3.6.0 was released in October 2023. Kafka is written in Java and Scala and runs on the Java Virtual Machine; most production deployments run on Linux. Many businesses need applications that stream data from thousands of sources in real time. Kafka is an open-source distributed streaming platform that can handle such large volumes of real-time data.

What Does Kafka Do?

Although Kafka works like a standard pub-sub message queue such as RabbitMQ, it does a few things differently:

- Kafka is a modern distributed system that can scale to handle numerous applications because of its ability to run as a cluster.

- While most message queues remove messages after a consumer confirms receipt, Kafka can store data as long as necessary.

- Kafka does more than move messages: it also supports stream processing, computing derived streams and datasets from the incoming data as it arrives.
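
The retention point is easiest to see in a toy model. The sketch below is an in-memory stand-in, not Kafka's client API: the class `RetainedLog` and the consumer names are made up for illustration. It shows that reading from a retained log does not remove anything, so several consumers can read the same events independently, each at its own position.

```python
class RetainedLog:
    """Toy append-only log: reading never removes records (hypothetical, not Kafka's API)."""

    def __init__(self):
        self.records = []   # records stay here after being read
        self.offsets = {}   # consumer name -> next position to read

    def append(self, record):
        self.records.append(record)
        return len(self.records) - 1  # position of the new record

    def poll(self, consumer, max_records=10):
        start = self.offsets.get(consumer, 0)
        batch = self.records[start:start + max_records]
        self.offsets[consumer] = start + len(batch)  # remember where this consumer stopped
        return batch


log = RetainedLog()
for event in ["order-created", "order-paid", "order-shipped"]:
    log.append(event)

billing_batch = log.poll("billing")      # reads all three events
analytics_batch = log.poll("analytics")  # a second consumer sees the same events
billing_again = log.poll("billing")      # nothing new for billing yet
```

In a classic queue, the first reader would have consumed the three events and the second reader would have found nothing.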

How does Apache Kafka Work?

Kafka lets you publish and subscribe to streams of records, store those streams in the order they arrive, and process them in real time. To understand how Kafka works, one must know how its elements (producer, cluster, topic, partition, and consumer) work.

Elements of Kafka

Producer

A producer is a client application that publishes events to topics. Kafka takes in real-time data from many producer systems and apps, such as IoT applications, logging systems, and monitoring systems.

Cluster

A cluster is the group of servers (known as brokers) that run Apache Kafka.

Topic

Kafka stores events in topics. A topic can be regular or compacted. A regular topic can be configured with a retention limit so that old data is deleted to free up storage. Records in a compacted topic, on the other hand, do not expire by age. Instead, a newer message replaces older messages that have the same key, and Kafka keeps the latest message for each key unless the user deletes it.
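
Compaction can be sketched in a few lines. This is a simplified, one-pass model under stated assumptions, not Kafka's implementation: the function `compact` and the sample records are hypothetical, and a record whose value is `None` plays the role of the delete marker (in Kafka, a record with a null value, often called a tombstone).

```python
def compact(records):
    """Keep only the latest value per key; a None value (tombstone) removes the key.

    `records` is a list of (key, value) tuples in arrival order.
    """
    latest = {}
    for key, value in records:
        # Drop any older record for this key, then re-insert so the
        # key's position reflects its most recent record.
        latest.pop(key, None)
        if value is not None:
            latest[key] = value
    return list(latest.items())


topic = [
    ("user-1", "alice@old.example"),
    ("user-2", "bob@example.com"),
    ("user-1", "alice@new.example"),  # supersedes the first user-1 record
    ("user-2", None),                 # delete marker for user-2
    ("user-3", "carol@example.com"),
]
compacted = compact(topic)
```

Real compaction runs in the background over older parts of the log, so superseded records can remain visible for a while. The toy only shows the end state.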

Partition

A topic needs a mechanism to spread its data over multiple brokers, and partitions provide it. Each topic is split into partitions, and Kafka appends every message to a partition in order, indexed by its position and stored together with a timestamp. Kafka distributes the partitions across the nodes of the cluster and replicates them to multiple servers, so a message stream survives the loss of a single server.
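
How a message finds its partition can be illustrated with a small sketch. The assumption here is key-based routing: hash the message key and take the remainder by the partition count. `choose_partition` is a hypothetical helper, and `zlib.crc32` is only a stand-in hash (Kafka's Java client hashes keys with murmur2 by default).

```python
import zlib


def choose_partition(key: str, num_partitions: int) -> int:
    """Map a key to a partition. crc32 is a stand-in for Kafka's real hash."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


NUM_PARTITIONS = 3
partitions = {p: [] for p in range(NUM_PARTITIONS)}

events = [
    ("sensor-a", 21.5),
    ("sensor-b", 19.0),
    ("sensor-a", 21.7),
    ("sensor-c", 30.2),
    ("sensor-a", 21.9),
]
for key, reading in events:
    partitions[choose_partition(key, NUM_PARTITIONS)].append((key, reading))

# All sensor-a readings land in one partition, in the order they were sent.
sensor_a_partition = partitions[choose_partition("sensor-a", NUM_PARTITIONS)]
sensor_a_readings = [r for k, r in sensor_a_partition if k == "sensor-a"]
```

Because the same key always maps to the same partition, the order of one sensor's readings is preserved even though the topic as a whole is spread over several partitions.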

Consumer

Consumers are client applications that read and process events from the partitions. The Kafka Streams API lets developers write Java applications that pull data from topics and write results back to Kafka. External stream processing systems such as Apache Spark, Apache Apex, Apache Flink, Apache NiFi, and Apache Storm can also be applied to these message streams.
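
The "read from a topic, write results back" pattern can be sketched without any Kafka library. In the toy below, plain Python lists stand in for an input topic and an output topic, and the names `page_views` and `views_per_user` are invented for the example. It is not the Kafka Streams API, only the shape of the computation.

```python
from collections import defaultdict

# Toy stand-ins for topics: plain lists of (key, value) records.
page_views = [
    ("alice", "/phones"),
    ("bob", "/laptops"),
    ("alice", "/phones/model-x"),
    ("alice", "/cart"),
]
views_per_user = []  # the derived "output topic"

counts = defaultdict(int)
for user, _page in page_views:                   # consume each input record in order
    counts[user] += 1                            # update the running state
    views_per_user.append((user, counts[user]))  # publish the updated count
```

Each input record produces one output record, so downstream readers of the derived stream always see the latest count per user.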

Apache Kafka: Key Features and Benefits

Scalable

Because Kafka divides topics into many partitions, the load can be balanced across servers, and users can scale capacity up or down as their requirements change. Kafka clusters can also be spread across geographic regions or availability zones.

Fast

Kafka decouples the producers of a data stream from its consumers, so a cluster of servers can deliver messages with latency as low as 2 ms.

Durable

Kafka protects against server failures by storing data streams in a fault-tolerant cluster. It persists messages to disk and replicates them across the brokers in the cluster.
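
A rough sketch shows why replication helps. The placement rule below (consecutive brokers, round-robin) is a simplification chosen for the example, not Kafka's actual assignment algorithm, and the functions `assign_replicas` and `available_partitions` are hypothetical.

```python
def assign_replicas(num_partitions, brokers, replication_factor):
    """Place each partition's replicas on consecutive brokers, round-robin."""
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    return {
        p: [brokers[(p + i) % len(brokers)] for i in range(replication_factor)]
        for p in range(num_partitions)
    }


def available_partitions(assignment, failed_brokers):
    """Partitions that still have at least one replica on a live broker."""
    return [
        p for p, replicas in assignment.items()
        if any(b not in failed_brokers for b in replicas)
    ]


brokers = ["broker-1", "broker-2", "broker-3"]
assignment = assign_replicas(num_partitions=4, brokers=brokers, replication_factor=2)
still_available = available_partitions(assignment, failed_brokers={"broker-2"})
```

With two copies of every partition, losing any single broker leaves all four partitions readable. Losing two brokers at once can take out the partitions whose copies lived on exactly those two.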

Real-Time Analysis

Organizations using Kafka are able to do real-time analytics. Kafka allows data streams to be processed and analyzed as events occur, which provides insights into current trends and patterns.

Commit Log

Kafka’s durability and fault tolerance make it possible for it to be used as a commit log. This helps in ensuring data integrity and preventing data loss.
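
The commit-log idea is that state can always be rebuilt by replaying the log from the start. A minimal sketch, using a plain list as the log and made-up account events (none of this is Kafka's API):

```python
commit_log = []  # append-only list of change events


def record(event):
    """Append a change event; existing entries are never modified."""
    commit_log.append(event)


def replay(log):
    """Rebuild account balances by applying every logged event in order."""
    balances = {}
    for account, delta in log:
        balances[account] = balances.get(account, 0) + delta
    return balances


record(("acct-1", 100))
record(("acct-2", 50))
record(("acct-1", -30))

balances = replay(commit_log)         # state derived from the log
recovered = replay(list(commit_log))  # a fresh replay yields the same state
```

If the service holding `balances` crashes, replaying the same log gives the same result, which is what makes a durable log useful for recovery.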

What is Kafka Used for?

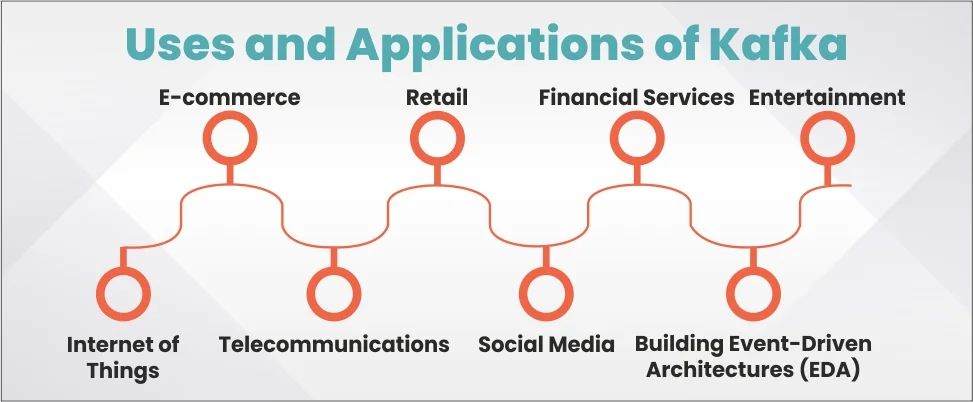

E-commerce

Suppose a customer is trying to order an air conditioner, but the model they want is out of stock. Another customer is only browsing for furniture using different keywords, and has purchased dining room furniture sets in the past. E-commerce websites can use Kafka to track this kind of customer behavior. It helps companies track what products customers are searching for, which pages they view, and what they have purchased in the past.

Retail

The retail sector is highly competitive with thin margins, and many retail companies are moving from physical shops to online channels. In either case, a personalized customer experience is crucial. Kafka helps retail businesses create new business models, provide a great customer experience, and improve operational efficiency. For instance, a retail chain like Walmart generates an enormous number of events per day. How does Kafka help a Walmart store? It supports a real-time inventory system with thousands of nodes that helps Walmart process the millions of events that occur every day.

Financial Services

Global financial services have to track billions of transactions per day. Earlier, financial firms had to collect data from businesses, send it to a vast data lake, and only then run analytics on the captured data. With Kafka, a financial analyst, for example someone working at Goldman Sachs, can analyze how the market is behaving as it happens, because Kafka supports real-time analysis.

Internet of Things (IoT)

IoT devices and infrastructures are everywhere today. And these services need access to live data from all over the world. Apache Kafka can manage millions of data points from IoT devices in real time. For example, imagine a city crosswalk where traffic lights adjust in real time based on traffic. Or wearable devices that need real-time monitoring of heart rates and blood sugar levels. Kafka helps IoT devices get all the data instantly.

Entertainment

Kafka allows entertainment platforms like Netflix to route and store messages through a streamlined architecture. Netflix can manage unified event publishing, collection, and routing for both batch and stream processing with the help of Kafka.

Telecommunications

The Telco industry also requires infrastructures that help companies process real-time data at scale. Metadata from phone records is processed and analyzed to monitor the infrastructure and sell context-specific personalized services to customers. Event streaming platforms like Kafka offer telecommunication companies the core infrastructure for such integration and application.

Social Media

Social media platforms like LinkedIn and X handle billions of messages per day. Moreover, a platform like X also tracks news in real time. Both platforms can benefit from using Kafka for real-time data streaming and analysis. Kafka lets LinkedIn use separate clusters for different applications. This keeps applications from clashing and prevents the failure of one application from harming the others.

Building Event-Driven Architectures (EDA)

EDAs use events to trigger and communicate changes between different components of a system. Kafka helps here by acting as a scalable and durable event bus for communication between event-driven microservices. It functions as both the event broker and the event log, decouples services from one another, and offers reliability through fault tolerance.
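
The decoupling can be sketched with a toy in-memory event bus. `EventBus` is a hypothetical class written for this example, not part of any Kafka client. It also simplifies delivery: it pushes events to subscribers immediately, whereas Kafka consumers pull events from the brokers at their own pace.

```python
from collections import defaultdict


class EventBus:
    """Toy in-memory event bus: publishers and subscribers only share topic names."""

    def __init__(self):
        self.log = defaultdict(list)       # topic -> every event published
        self.handlers = defaultdict(list)  # topic -> subscribed callbacks

    def subscribe(self, topic, handler):
        self.handlers[topic].append(handler)

    def publish(self, topic, event):
        self.log[topic].append(event)      # keep the event (event log role)
        for handler in self.handlers[topic]:
            handler(event)                 # deliver it (event broker role)


bus = EventBus()
emails, shipments = [], []
bus.subscribe("orders", lambda e: emails.append(f"receipt for {e['id']}"))
bus.subscribe("orders", lambda e: shipments.append(e["id"]))

bus.publish("orders", {"id": "o-1", "item": "phone"})
```

The order service that publishes the event knows nothing about the email or shipping services. A new subscriber can be added later without touching the publisher.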

Conclusion

Apache Kafka is a popular open-source stream-processing platform that helps its users collect, process, store, and analyze data at scale. It is known for its great performance, fault tolerance, and high throughput. Moreover, Apache Kafka can handle thousands of messages per second. It is a trusted tool used across industries, enabling organizations, from entertainment to social media and from healthcare to telecom, to modernize their data strategies with event streaming architecture.


FAQs

Kafka is an open-source software that gives a framework for storing, reading and analyzing streaming data.

Kafka is used to develop real-time streaming data pipelines and applications that adapt to the data streams.

Kafka is a free, open-source message broker. It provides high throughput, high availability, and low latency.

If you are using Kafka’s Streams API, it might need a few more additional coding guidelines.

Python developers can use kafka-python, PyKafka, and confluent-kafka-python to interface with Kafka brokers.